Stop Flying Blind with AI Prompts

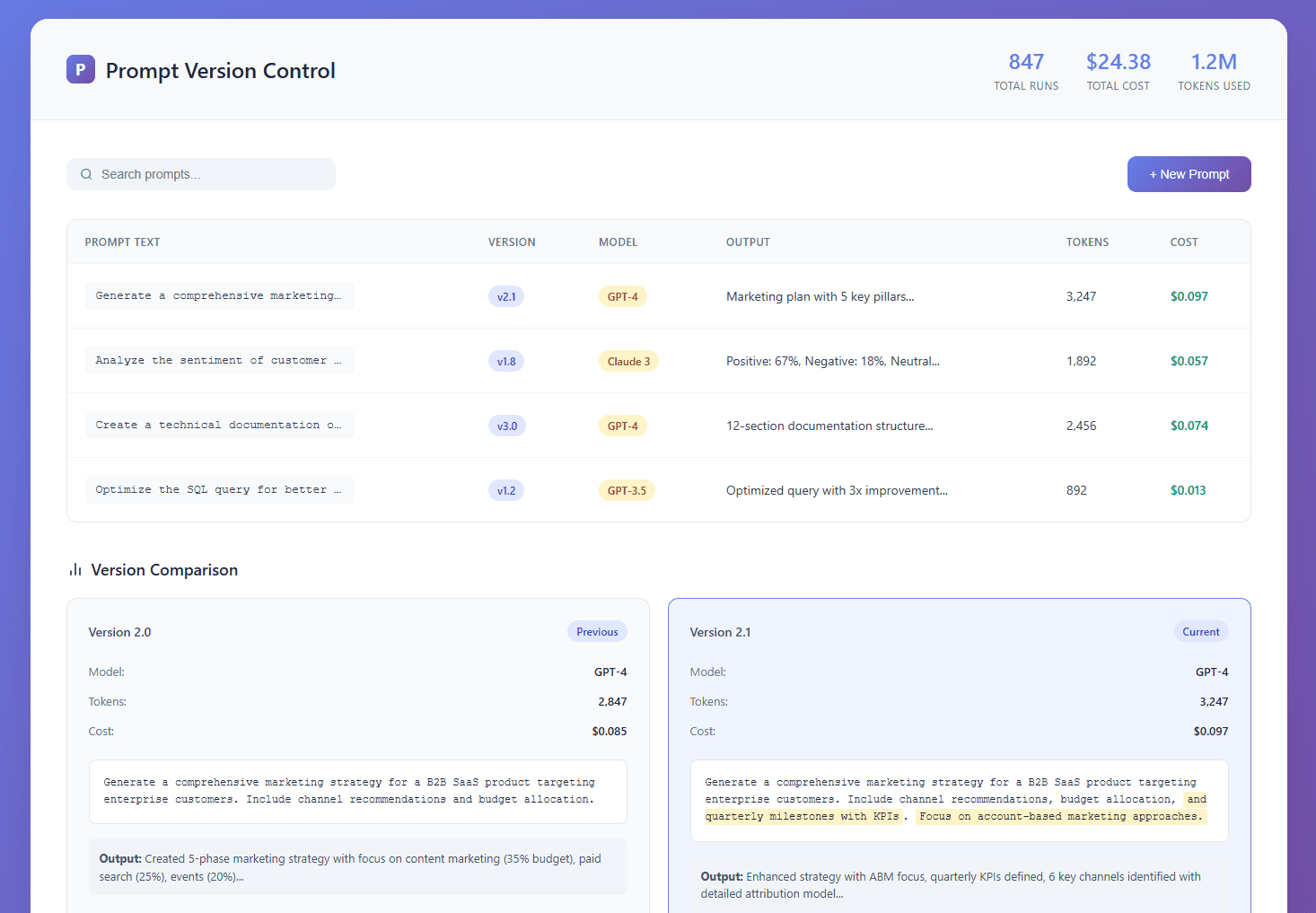

PromptPilot helps developers version, track, and optimize AI prompts with full cost visibility. Know exactly what changed, when it changed, and how much it costs.

The Problem

Prompt Chaos

You're tweaking prompts across multiple files, Slack messages, and Google Docs. No version control. No way to know what worked last week. No diff to compare changes.

Cost Blindness

Your OpenAI bill keeps climbing, but you have no idea which prompts are burning through tokens. No per-prompt cost breakdown. No way to know where to cut spending.

Built for Developers

Git-Like Versioning

Every prompt change is tracked automatically. View diffs, roll back to previous versions, and branch prompts for A/B testing. Your prompt history is your new superpower.

Token & Cost Analytics

See real-time token counts and costs per prompt. Track spending trends over time. Spot expensive prompts instantly and optimize them before they blow your budget.

Side-by-Side Comparison

Compare prompt versions side-by-side with output quality metrics and cost differences. Make data-driven decisions about which prompts perform best.

Team Collaboration

Share prompts with your team, leave comments on versions, and track who changed what. No more prompt knowledge living in one person's head.

Drop-In Integration

A simple SDK wraps your existing OpenAI, Anthropic, or custom LLM calls. Add one line of code and start tracking immediately. No infrastructure changes needed.

Performance Metrics

Track latency, success rates, and output quality scores. Correlate prompt changes with performance improvements. Ship better AI features faster.

Ready to take control of your AI prompts?

Be among the first developers to ship better AI features with PromptPilot.

Beta starting Q4 2025